Description

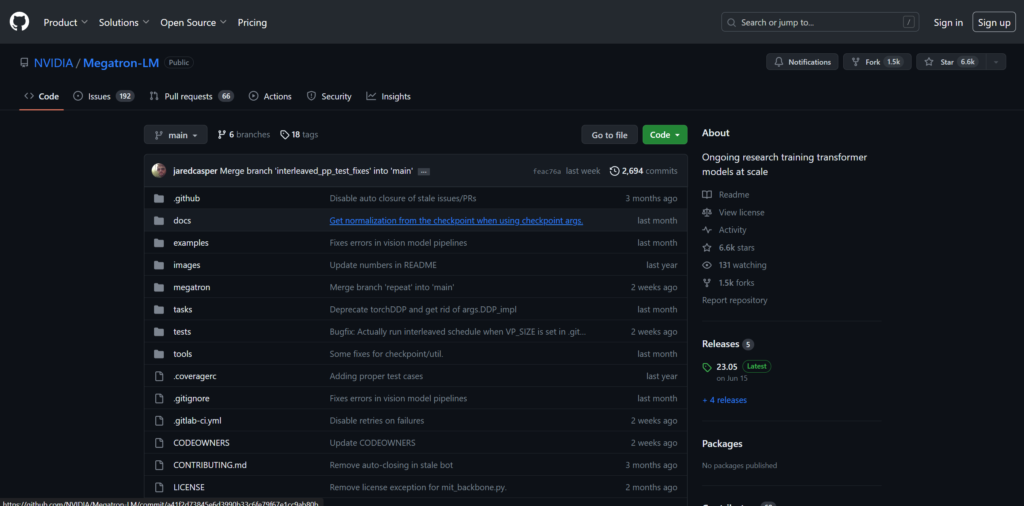

Megatron (versions 1, 2, and 3) is a large, high-performance transformer framework developed by NVIDIA's Applied Deep Learning Research team to advance research on large transformer language models. It is designed for training these models at very large scale, which makes it useful for a wide range of applications.

Key Highlights:

Efficient Model Parallelism: Megatron implements tensor, sequence, and pipeline model parallelism. These techniques make training large transformer models such as GPT, BERT, and T5 efficient and scalable.

Mixed Precision: Megatron uses mixed-precision training for large-scale language models, which makes more efficient use of the available hardware.

Projects Utilizing Megatron: Megatron has been applied in a wide range of projects, demonstrating its versatility across domains.
Some notable projects include:

- Studies on BERT and GPT Using Megatron
- BioMegatron: Advancements in Biomedical Domain Language Models
- End-to-End Training of Neural Retrievers for Open-Domain Question Answering
- Large Scale Multi-Actor Generative Dialog Modeling
- Local Knowledge Powered Conversational Agents
- MEGATRON-CNTRL: Controllable Story Generation with External Knowledge
- Advancements in the RACE Reading Comprehension Dataset Leaderboard
- Training Question Answering Models From Synthetic Data
- Detecting Social Biases with Few-shot Instruction Prompts
- Exploring Domain-Adaptive Training for Detoxifying Language Models
- Leveraging DeepSpeed and Megatron for Training Megatron-Turing NLG 530B

NeMo Megatron: Megatron is used in NeMo Megatron, an end-to-end framework for building and training advanced natural language processing models with billions or even trillions of parameters. It is aimed particularly at enterprises running large-scale NLP projects.

Scalability: Megatron's codebase can efficiently train language models with hundreds of billions of parameters, and it scales across both the number of GPUs and the model size. The scaling studies cover GPT models ranging from 1 billion to 1 trillion parameters and were run on NVIDIA's Selene supercomputer, using up to 3072 A100 GPUs for the largest model. The benchmark results show nearly linear scaling.
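Spreading one model over thousands of GPUs means dividing them among the parallelism dimensions described above. In Megatron-style training, the total GPU count is commonly treated as the product of the tensor-parallel, pipeline-parallel, and data-parallel sizes. The helper below is a hypothetical sketch of that arithmetic; the function name and the 8 × 64 split in the example are assumptions chosen for illustration, not values taken from the benchmark.

```python
# Hypothetical helper illustrating how a GPU cluster is divided among the
# parallelism dimensions; this is not Megatron's actual API.


def data_parallel_size(world_size: int, tensor_parallel: int, pipeline_parallel: int) -> int:
    """Return how many data-parallel model replicas fit in world_size GPUs.

    Each replica spans tensor_parallel * pipeline_parallel GPUs, so the world
    size must be an exact multiple of that product.
    """
    if tensor_parallel < 1 or pipeline_parallel < 1:
        raise ValueError("parallel sizes must be positive")
    gpus_per_replica = tensor_parallel * pipeline_parallel
    if world_size % gpus_per_replica != 0:
        raise ValueError(
            f"world_size={world_size} is not divisible by "
            f"tensor_parallel * pipeline_parallel = {gpus_per_replica}"
        )
    return world_size // gpus_per_replica


if __name__ == "__main__":
    # Illustrative split only: 3072 GPUs with tensor-parallel size 8 and
    # pipeline-parallel size 64 leaves 3072 / 512 = 6 data-parallel replicas.
    print(data_parallel_size(3072, tensor_parallel=8, pipeline_parallel=64))  # → 6
```

The divisibility check mirrors the practical constraint that every model replica must occupy the same number of GPUs; any leftover GPUs could not form a complete replica.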

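The tensor parallelism listed under Efficient Model Parallelism splits individual layers across devices. The following minimal pure-Python sketch shows one common way to split a linear layer: the weight matrix is divided by columns across simulated ranks, each rank multiplies the full input by its own shard, and the partial outputs are concatenated, standing in for the all-gather a real multi-GPU setup would perform. All function names here are hypothetical, and this is an illustration of the idea, not Megatron's implementation.

```python
# A minimal, pure-Python sketch of column-wise tensor (intra-layer) parallelism.
# Each simulated "rank" holds a slice of the weight matrix's columns, computes
# its partial output independently, and the partial outputs are concatenated.

from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    inner = len(b)
    cols = len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def split_columns(w: Matrix, world_size: int) -> List[Matrix]:
    """Split weight matrix w column-wise into world_size equal shards."""
    cols = len(w[0])
    if cols % world_size != 0:
        raise ValueError("number of columns must be divisible by world_size")
    shard = cols // world_size
    return [[row[r * shard:(r + 1) * shard] for row in w] for r in range(world_size)]


def column_parallel_forward(x: Matrix, w: Matrix, world_size: int) -> Matrix:
    """Compute x @ w by sharding w's columns across simulated ranks."""
    shards = split_columns(w, world_size)
    # Each rank multiplies the full input by its own weight shard.
    partial_outputs = [matmul(x, shard) for shard in shards]
    # Simulated all-gather: concatenate each row's partial outputs in rank order.
    return [
        [value for part in partial_outputs for value in part[i]]
        for i in range(len(x))
    ]


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]        # batch of 2 inputs, hidden size 2
    w = [[1.0, 0.0, 2.0, -1.0],         # 2 x 4 weight matrix
         [0.5, 1.0, 0.0, 3.0]]
    print(column_parallel_forward(x, w, world_size=2) == matmul(x, w))  # → True
```

Because each shard's output columns depend only on that shard's weights, the ranks need no communication until the outputs are gathered, which is what allows one layer's weights to be spread over several GPUs.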

Similar AI Tools

- Replit
- Tabnine
- MutableAI